In the last few years, generative neural networks have passed an important milestone in their development. They have become more powerful and can now create not only images but also videos from text descriptions. Microsoft's new VASA-1 algorithm will probably surprise many, because it does not require any text description at all.

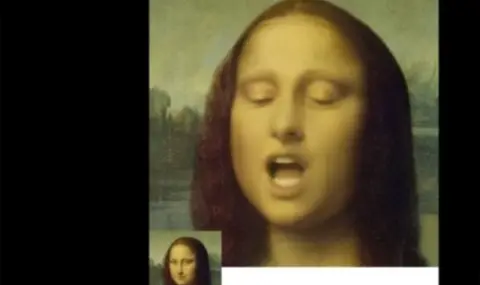

All it needs is a single image of a person and an audio recording. From these, the neural network generates a video of the person speaking, with a wide range of emotions and natural facial expressions.

The results of VASA-1 look very natural and believable. From just one photo of a face and a voice recording, the algorithm creates a realistic video in which the person in the photo literally “comes to life”, and their facial expressions, lip movements, and head motion look completely natural.

Because videos created with VASA-1 are hard to tell apart from real footage at first glance, there are already concerns that the algorithm could be used to create deepfakes.

As for the neural network itself, its main difference from similar algorithms is a holistic model that generates facial expressions and head movements together. Microsoft's researchers carried out an extensive evaluation, including a number of new metrics, and the results show that the new algorithm significantly outperforms previously presented methods in many respects.

“Our method not only generates high-quality video with realistic facial expressions and head movements, but also supports online generation of 512x512-pixel video at 40 frames per second with low initial latency. This paves the way for real-time interactions with lifelike avatars that mimic human conversational behavior,” Microsoft said in a statement.

In other words, the neural network can create high-quality fake videos from just one image. It is therefore no surprise that Microsoft calls VASA-1 a “research demonstration” and has no plans to bring it to market, at least not anytime soon.